Updated September 13, 2023

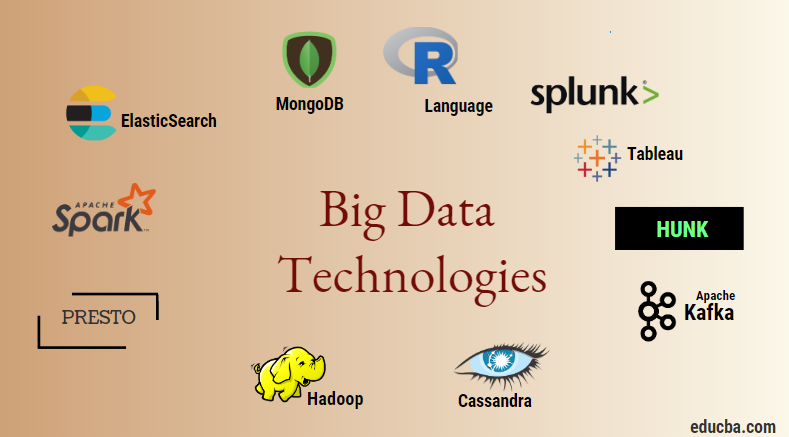

Introduction to Big Data Technologies

Big data technology, along with Hadoop, is as big a buzzword as it sounds. With data volumes growing sharply across every industry, it has become important to establish efficient techniques that meet the needs of clients and of the large industries that generate this data. Previously, general-purpose programming languages and simple Structured Query Language (SQL) were enough to handle the data, but these systems and tools accomplish little at big data scale.

Big data technology encompasses the technologies and software utilities designed to analyze, process, and extract information from extremely large and structurally complex data sets that traditional systems find challenging to manage. It handles both real-time and batch data. As machine learning has become a critical part of everyday life and of every industry, managing data with big data tools has become very important.

Types of Big Data Technologies

Before starting with the list of technologies, let us first see the broad classification of all these technologies.

They can mainly be classified into four domains.

- Data storage

- Analytics

- Data mining

- Visualization

Let us first cover the technologies that fall under data storage.

Next, let us look at another field of big data technology: data mining.

Now let us read about the big data technologies that are part of data analytics:

Apache Oozie is a workflow scheduler system for managing Hadoop jobs. Its workflow jobs are defined as Directed Acyclic Graphs (DAGs) of actions. It is a scalable and organized solution for big data activities.
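The idea of a workflow as a DAG of actions can be sketched in plain Python. This is only an illustration, not any scheduler's real job format: the action names are hypothetical, and the standard-library `graphlib` module (Python 3.9+) stands in for the scheduler's dependency resolution.

```python
from graphlib import TopologicalSorter

# Hypothetical workflow: each action maps to the set of actions it depends on.
workflow = {
    "ingest": set(),
    "clean": {"ingest"},
    "validate": {"ingest"},
    "aggregate": {"clean", "validate"},
    "report": {"aggregate"},
}

def execution_order(dag):
    """Return one valid order in which every action runs after its dependencies."""
    return list(TopologicalSorter(dag).static_order())

order = execution_order(workflow)
print(order)
```

Because the graph is acyclic, a valid order always exists; if an action depended on itself through a cycle, `TopologicalSorter` would raise `graphlib.CycleError` instead.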

Apache Airflow is a platform that schedules and monitors workflows. Smart scheduling helps in organizing and executing a project efficiently. Airflow can rerun a DAG instance when a failure occurs. Its rich user interface makes it easy to visualize pipelines running in various stages, such as production, to monitor progress, and to troubleshoot issues when needed.
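The rerun-on-failure behavior can be pictured with a small plain-Python sketch. It does not use the Airflow API; `run_with_retries` and `flaky_task` are hypothetical names invented here to show the general retry pattern only.

```python
def run_with_retries(task, max_retries=2):
    """Call task(); if it raises, rerun it up to max_retries more times.

    Returns (result, attempts). Re-raises the last error once retries run out.
    """
    attempts = 0
    while True:
        attempts += 1
        try:
            return task(), attempts
        except Exception:
            if attempts > max_retries:
                raise

# Hypothetical task that fails twice with a transient error, then succeeds.
calls = {"n": 0}

def flaky_task():
    calls["n"] += 1
    if calls["n"] < 3:
        raise RuntimeError("transient failure")
    return "done"

result, attempts = run_with_retries(flaky_task, max_retries=3)
print(result, attempts)
```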

Apache Beam is a unified model for defining and executing data processing pipelines, including ETL and continuous streaming. The Beam framework provides an abstraction between your application logic and the big data ecosystem, since no single API otherwise binds together frameworks such as Hadoop and Spark.
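The separation between pipeline logic and the engine that runs it can be sketched as follows. This is a simplified plain-Python illustration, not the Apache Beam API: the transforms and the two runner functions are hypothetical, and they only show how the same pipeline definition can serve both batch and streaming execution.

```python
# Pipeline logic: three transforms, written once, independent of any runner.
def parse(lines):
    return (line.strip().split(",") for line in lines)

def keep_valid(rows):
    return (r for r in rows if len(r) == 2 and r[1].isdigit())

def to_amount(rows):
    return ((name, int(value)) for name, value in rows)

pipeline = [parse, keep_valid, to_amount]

def run_batch(steps, data):
    """Batch runner: consume a finite collection and return all results."""
    stream = iter(data)
    for step in steps:
        stream = step(stream)
    return list(stream)

def run_streaming(steps, source):
    """Streaming runner: yield each result lazily as records arrive."""
    stream = source
    for step in steps:
        stream = step(stream)
    yield from stream

records = ["a,1", "bad", "b,2\n"]
print(run_batch(pipeline, records))
for item in run_streaming(pipeline, iter(records)):
    print(item)
```

Swapping `run_batch` for `run_streaming` changes how the data is consumed without touching the transforms, which is the kind of decoupling the abstraction aims for.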

Let us now discuss the technologies related to Data Visualization.

Docker and Kubernetes are emerging technologies that help applications run in Linux containers. Docker is an open-source collection of tools that help you “Build, Ship, and Run Any App, Anywhere”.

Kubernetes is also an open-source container orchestration platform, allowing large numbers of containers to work together in harmony. This ultimately reduces the operational burden.

TensorFlow is an open-source machine learning library used to design, build, and train deep learning models. All computations in TensorFlow are expressed as data flow graphs. Graphs comprise nodes and edges. Nodes represent mathematical operations, while the edges represent the data that flows between them.
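A toy version of a data flow graph makes the node and edge roles concrete. The sketch below is plain Python and does not use the TensorFlow API; the `Node` and `Placeholder` classes are hypothetical and exist only to show operations as nodes with data flowing along the edges between them.

```python
import operator

class Node:
    """A graph node: an operation applied to the values on its incoming edges."""

    def __init__(self, op, *inputs):
        self.op = op
        self.inputs = inputs

    def evaluate(self, feed):
        # Values flow along edges from the input nodes into this operation.
        args = [node.evaluate(feed) for node in self.inputs]
        return self.op(*args)

class Placeholder(Node):
    """A source node whose value is supplied when the graph is evaluated."""

    def __init__(self, name):
        super().__init__(None)
        self.name = name

    def evaluate(self, feed):
        return feed[self.name]

# Build the graph for y = (a * b) + c; nothing is computed at this point.
a, b, c = Placeholder("a"), Placeholder("b"), Placeholder("c")
y = Node(operator.add, Node(operator.mul, a, b), c)

print(y.evaluate({"a": 2, "b": 3, "c": 4}))  # prints 10
```

Building the graph first and evaluating it later is what lets a framework decide where and how each operation runs.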

TensorFlow is useful for both research and production. Its developers built it to run on multiple CPUs, GPUs, and even mobile operating systems. It can be used from Python, C++, R, and Java.

Polybase works on top of SQL Server to access data stored in PDW (Parallel Data Warehouse). PDW is built to process any volume of relational data and provides integration with Hadoop.

Hive is a platform for querying and analyzing large datasets. It provides a SQL-like query language called HiveQL, whose queries are converted internally into MapReduce jobs and then executed.
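To give a feel for what converting a query into MapReduce means, the sketch below hand-translates a query of the form `SELECT dept, COUNT(*) FROM employees GROUP BY dept` into map, shuffle, and reduce steps in plain Python. The table, its rows, and the function names are hypothetical, and Hive's real query planner is considerably more involved than this.

```python
from collections import defaultdict

# Hypothetical rows of an "employees" table: (name, dept).
rows = [
    ("ann", "sales"),
    ("bob", "eng"),
    ("cat", "eng"),
    ("dan", "sales"),
    ("eve", "eng"),
]

def map_phase(records):
    """Emit (key, 1) for each row, keyed by the GROUP BY column."""
    for _name, dept in records:
        yield dept, 1

def shuffle_phase(pairs):
    """Group emitted values by key, as happens between map and reduce."""
    groups = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return groups

def reduce_phase(groups):
    """Sum the values for each key, which implements COUNT(*)."""
    return {key: sum(values) for key, values in groups.items()}

counts = reduce_phase(shuffle_phase(map_phase(rows)))
print(counts)
```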

With the rapid growth of data and organizations’ strong drive to analyze it, so many mature technologies have entered the market that knowing them is a real advantage. Today, big data technology addresses many business needs and problems by increasing operational efficiency and predicting relevant behavior. A career in big data and its related technologies can open many opportunities for individuals as well as for businesses.

Hence, it’s high time to adopt big data technologies.

Conclusion

Big Data technologies have revolutionized data management and analytics, enabling organizations to harness vast information for valuable insights. They offer scalability, real-time processing, and diverse data storage and analysis tools. Embracing these technologies is essential for staying competitive in the data-driven era, driving innovation and informed decision-making.

FAQs

Q1. How can I get started with Big Data technologies?

Ans: You can start by learning programming languages such as Python and Java, studying relevant frameworks and tools, taking online courses, or working on personal or open-source projects. Additionally, cloud providers often offer free tiers for experimentation.

Q2. What are the future trends in Big Data technologies?

Ans: In the future, we may see AI and machine learning being integrated with Big Data, edge computing being used for real-time processing, an increase in the use of graph databases, and more automation in data management and analysis.

Q3. What industries benefit the most from Big Data technologies?

Ans: Big Data technologies have applications in various industries, including finance, healthcare, retail, e-commerce, marketing, logistics, and manufacturing.

Q4. How does cloud computing relate to Big Data?

Ans: Companies can utilize cloud providers for their Big Data needs, as they offer flexible and affordable infrastructure for storing and processing large amounts of data. Some popular cloud services for this include Amazon S3 and EC2 from AWS, Azure Data Lake and HDInsight from Microsoft’s Azure, and Google Cloud’s Dataprep and BigQuery. Big Data solutions often make use of these services.
