Updated June 9, 2023

Introduction to Sqoop Commands

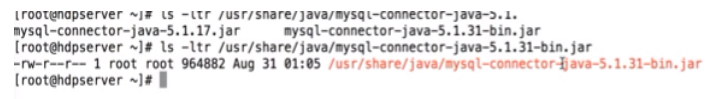

In Sqoop, every row of a table is treated as a record, and each job is internally subdivided into subtasks that run as map tasks. The databases supported by Sqoop include MySQL, Oracle, IBM DB2, and PostgreSQL. Sqoop provides a simple command-line interface through which we can fetch data from these databases. It is written in Java and uses JDBC to connect to them.

It is an open-source tool for moving data from SQL to Hadoop and from Hadoop to SQL. It is a connectivity tool that transfers bulk data between relational database systems and Hadoop (Hive, MapReduce, Mahout, Pig, HBase). It lets users specify a target location inside Hadoop and instructs Sqoop to move data from the RDBMS to that target. It provides an optimized MySQL connector that uses database-specific APIs to perform bulk transfers efficiently. Users can also import data from external sources directly into Hive or HBase. Sqoop imports data in two basic file formats: delimited text files and SequenceFiles.

Basic Sqoop Commands

The basic commands are explained below:

1. List tables

This command lists the tables of a particular database on the MySQL server.

Example:

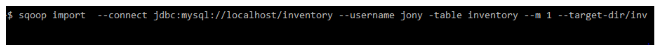

2. Target directory

This command imports a table into a specific directory in HDFS. The -m argument sets the number of mappers and takes an integer value.

Example:
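As a minimal sketch, assuming a MySQL database named inventory on localhost, a user jony, and a table lib (the target path and mapper count are illustrative), an import into a chosen HDFS directory might be written as follows. The helper import_to_dir is hypothetical and only prints the command as a dry run; remove the echo to execute it on a machine with Sqoop installed.

```shell
# Dry-run helper: "echo" only prints the command; remove it to run the import.
# Database, user, table and paths are assumptions made for this sketch.
import_to_dir() {
  echo sqoop import \
    --connect jdbc:mysql://localhost/inventory \
    --username jony -P \
    --table "$1" \
    --target-dir "$2" \
    -m "$3"
}

# Import table "lib" into /user/jony/lib using 4 parallel map tasks.
import_to_dir lib /user/jony/lib 4
```

The -P flag makes Sqoop prompt for the password instead of taking it on the command line.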

3. Password protection

Example:

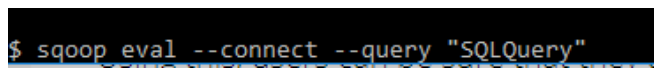

4. sqoop-eval

This command quickly runs SQL queries against the respective database and prints the results to the console.

Example:
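A short sketch of sqoop eval, assuming the same illustrative inventory database, jony user, and lib table. The run_query helper is hypothetical and prints the command rather than running it; note that the printed form drops the quotes around the SQL text, which are still needed when the command is typed by hand.

```shell
# Dry-run helper: prints the sqoop eval command for a given SQL statement.
# Remove "echo" to actually run the query (requires Sqoop and database access).
run_query() {
  echo sqoop eval \
    --connect jdbc:mysql://localhost/inventory \
    --username jony -P \
    --query "$1"
}

# Preview the first five rows of the (assumed) lib table.
run_query 'SELECT * FROM lib LIMIT 5'
```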

5. sqoop version

This command displays the installed version of Sqoop.

Example:

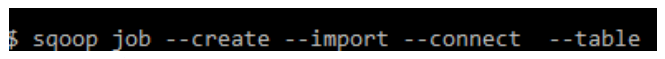

6. sqoop-job

This command lets us create a saved job; the saved parameters can then be invoked at any time. It takes options such as --create, --delete, --show, and --exec.

Example:
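The life cycle of a saved job can be sketched as below. The job name daily_lib_import and the connection details are assumptions for illustration, and the sqoop_job wrapper is a hypothetical dry-run helper that prints each command instead of executing it.

```shell
# Dry-run wrapper: prints "sqoop job ..." instead of executing it.
# Replace the body with:  sqoop job "$@"  to run for real.
sqoop_job() {
  echo sqoop job "$@"
}

JOB=daily_lib_import   # hypothetical job name

# Save an import definition; everything after the bare "--" is the saved tool call.
sqoop_job --create "$JOB" -- import \
  --connect jdbc:mysql://localhost/inventory \
  --username jony -P \
  --table lib

sqoop_job --list            # list saved jobs
sqoop_job --show "$JOB"     # show the saved parameters
sqoop_job --exec "$JOB"     # run the saved job
sqoop_job --delete "$JOB"   # remove the job definition
```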

7. Loading CSV file to SQL

Example:

8. Connector

Example:

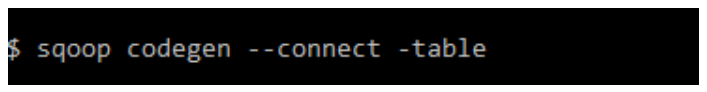

9. codegen

This Sqoop command creates Java class files that encapsulate the imported records. It retrieves the list of columns and their data types from the table and generates code for interacting with the database records. When it is run again, the Java files are recreated and a new version of the class is generated.

Example:

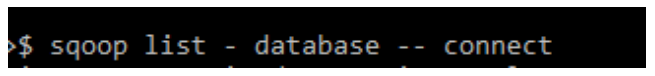

10. List databases

This Sqoop command lists all the available databases in the RDBMS server.

Example:
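A minimal sketch of listing databases, assuming a MySQL server on localhost and a user named jony. The list_databases helper is hypothetical and only prints the command.

```shell
# Dry-run helper: prints the list-databases command for a host and user.
# Remove "echo" to query a real server.
list_databases() {
  echo sqoop list-databases \
    --connect "jdbc:mysql://$1/" \
    --username "$2" -P
}

list_databases localhost jony
```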

Intermediate Sqoop Commands

The intermediate commands are as follows:

1. sqoop-metastore

This command hosts a shared metadata repository, so that multiple or remote users can define and run saved jobs.

Command:

$ sqoop metastore

Clients locate the metastore through the sqoop.metastore.client.autoconnect.url property.

Example: jdbc:hsqldb:hsql://metastore.example.com/sqoop

2. sqoop help

This command lists the tools available in sqoop and their purpose.

Command:

$ sqoop help

$ bin/sqoop help import

3. Exporting

This command exports data from HDFS to an RDBMS table. In HDFS, the data is stored as records.

Command:

$ sqoop export --connect jdbc:mysql://localhost/inventory --username jony --table lib --export-dir /user/jony/inventory

4. Insert

This command inserts new records from HDFS into the RDBMS table.

Command:

$ sqoop export --connect jdbc:mysql://localhost/sqoop_export --table emp_exported --export-dir /sqoop/newemp -m -000

5. Update

This command updates existing records in the RDBMS table from HDFS data, matching rows on the column given by --update-key.

Command:

$ sqoop export --connect jdbc:mysql://localhost/sqoop_export --table emp_exported --export-dir /sqoop/newemp -m -000 --update-key id
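The difference between a pure update and an update-or-insert export can be sketched with the --update-mode argument, which accepts updateonly (the default) and allowinsert. The database, table, and directory come from the commands above; the root user and the upsert_export helper are assumptions, and the helper only prints the command.

```shell
# Dry-run helper: prints an export that matches rows on the "id" column.
# $1 = HDFS export directory, $2 = update mode (updateonly | allowinsert).
upsert_export() {
  echo sqoop export \
    --connect jdbc:mysql://localhost/sqoop_export \
    --username root -P \
    --table emp_exported \
    --export-dir "$1" \
    --update-key id \
    --update-mode "$2"
}

upsert_export /sqoop/newemp updateonly    # only modify rows that already exist
upsert_export /sqoop/newemp allowinsert   # also insert rows with no match
```

Whether allowinsert is available depends on the connector in use, so it is worth checking against the target database before relying on it.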

6. Batch Option

This option inserts multiple rows together; it speeds up insertion by letting the JDBC driver execute the statements in batches.

Command:

$ sqoop export --connect jdbc:mysql://hostname/<db-name> --username <username> --password <password> --table <table-name> --export-dir <dir> --batch

7. Split

With the Data Connector for Oracle and Hadoop, setting the where-clause location to SPLIT applies the where clause to the entire SQL statement used by each split.

Command:

$ sqoop import -D oraoop.table.import.where.clause.location=SPLIT --table JUNK --where "rownum<=12"

8. AVRO file into HDFS

This command stores RDBMS data in HDFS as an Avro data file.

Command:

$ sqoop import --connect jdbc:mysql://localhost/Acadgild --username root --password pp.34 --table payment -m 1 --target-dir /sqoop_data/payment/avro/ --as-avrodatafile

Advanced Commands

The advanced commands are as follows:

1. Import Commands

The import command accepts import control arguments. The main ones are as follows:

- --boundary-query <statement>: boundary query used for creating splits.

- --as-textfile: imports data as plain text.

- --columns <col,col,...>: columns to import from the table.

- -m, --num-mappers <n>: number of map tasks used to import in parallel.

- --split-by <column>: column of the table used to split the work units.

- -z, --compress: enables compression of the data.

Incremental Import Arguments:

- --check-column <col>: the column examined to determine which rows to import.

- --incremental <mode>: how new rows are determined; the modes are append and lastmodified.

Output Line Arguments:

- --lines-terminated-by <char>: sets the end-of-line character.

- --mysql-delimiters: uses MySQL's default delimiter set (fields: , lines: \n escaped-by: \ optionally-enclosed-by: ').
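The import control and incremental arguments listed above can be combined in one command. The sketch below assumes an inventory database with a lib table whose columns id, title, and updated_at are hypothetical, and it adds --last-value, which tells Sqoop where the previous import stopped. The incremental_import helper only prints the command.

```shell
# Dry-run helper: prints an incremental, compressed, 4-mapper text import.
# $1 = highest value of the check column seen by the previous import.
incremental_import() {
  echo sqoop import \
    --connect jdbc:mysql://localhost/inventory \
    --username jony -P \
    --table lib \
    --columns id,title,updated_at \
    --split-by id \
    -m 4 \
    -z \
    --as-textfile \
    --incremental append \
    --check-column id \
    --last-value "$1" \
    --target-dir /user/jony/lib
}

# Import only rows whose id is greater than 1000.
incremental_import 1000
```

Append mode suits tables that only gain new rows with an increasing key; lastmodified mode is the alternative when existing rows are updated and carry a timestamp column.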

2. Import to Hive

--hive-import: imports tables into Hive.

--hive-partition-key: name of the Hive field on which the partitions are sharded.

--hive-overwrite: overwrites the existing data in the Hive table.
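A sketch of a Hive import that uses these arguments together with --hive-table and --hive-partition-value. The Hive table name lib_hive, the partition field load_date, and the connection details are assumptions for illustration; the hive_import helper only prints the command.

```shell
# Dry-run helper: prints a Hive import that overwrites one partition.
# $1 = Hive table, $2 = partition key, $3 = partition value.
hive_import() {
  echo sqoop import \
    --connect jdbc:mysql://localhost/inventory \
    --username jony -P \
    --table lib \
    --hive-import \
    --hive-overwrite \
    --hive-table "$1" \
    --hive-partition-key "$2" \
    --hive-partition-value "$3"
}

hive_import lib_hive load_date 2023-06-09
```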

3. Import to Hbase Arguments

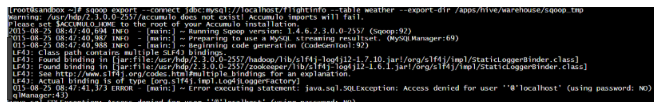

-accumulo-table <tablename>: This specifies the target table in HBase.

-accumulo -column<family>: To import, it sets the target column.

-accumulo -<username>: To import name of the accumulo

–accumulo -<password >: To import password of the accumulo

4. Storing in Sequence Files

$ sqoop import --connect jdbc:mysql://db.foo.com/emp --table inventory --class-name com.foo.com.Inventory --as-sequencefile

5. Query import

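This command imports the result of a free-form SQL statement given with the --query argument. The statement must contain the $CONDITIONS token, which Sqoop replaces with the range condition for each split.

$ sqoop import --query 'SELECT a.*, b.* FROM a JOIN b ON (a.id = b.id) WHERE $CONDITIONS' --split-by <column> --target-dir /user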
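Quoting matters for a free-form query import, because the shell would otherwise expand the conditions token as a variable. The sketch below assumes the inventory connection details, uses a.id as an illustrative split column, and takes a hypothetical target directory; query_import only prints the command.

```shell
# Dry-run helper: prints a free-form query import.
# Single quotes keep the shell from expanding $CONDITIONS, so Sqoop
# receives the literal token and substitutes each split's range for it.
query_import() {
  echo sqoop import \
    --connect jdbc:mysql://localhost/inventory \
    --username jony -P \
    --query 'SELECT a.*, b.* FROM a JOIN b ON (a.id = b.id) WHERE $CONDITIONS' \
    --split-by a.id \
    --target-dir "$1"
}

query_import /user/jony/joined
```

If the query is wrapped in double quotes instead, the token has to be written as \$CONDITIONS to reach Sqoop intact.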

6. Incremental imports

$ sqoop import --connect <jdbc-uri> --table <table-name> --username <username> --password <password> --incremental <mode> --check-column <column> --last-value <value>

7. Importing all tables to HDFS

$ sqoop import-all-tables --connect jdbc:mysql://localhost/sale_db --username root

8. Importing data to hive

$ sqoop import --connect <jdbc-uri> --table <table-name> --username <username> --password <password> --hive-import --hive-table <hive-table>

9. Importing data to HBase

Command:

$ sqoop import --connect <jdbc-uri> --table <table-name> --username <username> --password <password> --hbase-table <hbase-table> --column-family <family>

10. Encode null values

Command:

$ sqoop import --connect jdbc:mysql://mysql.ex.com/sqoop --username sqoop --password sqoop --table lib --null-string '<value>'

Tips and Tricks

To run data transfer operations efficiently, we can use Sqoop: a single command line can carry out many tasks and subtasks. Sqoop connects to different relational databases through connectors, which use JDBC drivers to interact with them. Since Sqoop can run from its own source, we can execute it without an installation process. Sqoop is also fast because it processes data in parallel: imports and exports run as MapReduce jobs, which provide the parallel execution.

Conclusion

To conclude, Sqoop manages the process of importing and exporting data. It can update parts of a table through incremental loads. Data import in Sqoop is not event-driven. Sqoop 2 adds a GUI for easier access alongside the command line. Data transfer is fast because it runs in parallel. Sqoop plays a vital role in the Hadoop environment and does its job independently, although it is not necessarily needed when importing small data sets.
